Let's talk about Animation Quality

02/10/2024

Attending both CVPR and SIGGRAPH this year it was interesting to observe and discuss the difference between how vision and graphics researchers approach the field of animation. There was a sense from some researchers that the reviews from the graphics communities like SIGGRAPH could be too tough, and rejected papers based on petty irrelevant issues like shadow quality. While on the other side there were feelings that the vision community are willfully ignoring problems in their results while marching forward to develop new models. And I think both of these feelings really come to the forefront on the issue of animation quality, so I thought it might be a good opportunity to examine that subject a bit more.

High Quality Animation Data

I think one of the best ways to start looking at animation quality is to look at some of the different sources of animation data in the community and compare them. For example, here is a video of some data from the Motorica Dance Dataset which is one of the highest quality publicly available datasets of motion capture in the graphics community:

Here is another animation, this time with the skeleton visualized.

This is the kind of data that is often typical in the AAA game and VFX industry. This data is sampled at 120 Hz, with finger and toe motions, captured with an optical system which can triangulate marker positions with sub-mm accuracy and solve onto a skeleton with realistic proportions and carefully positioned joints that accurately fit the actor. It's easy to see all of the detail and nuance that exists in data like this. The foot contacts are perfectly clean, there is no perceptual noise or foot sliding, and when skinned by a talented artist onto a well made character you get something that looks very natural and realistic with all sorts of subtle details.

When I look at this kind of animation data I see it as a target. Whatever animation systems we build should aim to generate animation of this quality. But I also see it as a kind of ceiling - because when we are evaluating our methodologies, the maximum quality of the data is a bit like the sensitivity of our measuring device - once the differences become indistinguishable we can't evaluate them reliably anymore.

So let's start by trying to work out some basic facts about this kind of data. What sort of precision is required when capturing, storing, or generating it?

Temporal Resolution

I'll start with the temporal dimension... and I'll keep my analysis stupidly simple here... because if you talk to someone who actually knows about signal processing, they will tell you that almost everything we do when it comes to handling the temporal aspect of animation data is already wrong - from up-sampling to down-sampling and interpolation.

(For an accessible intro for graphics people as to what exactly is wrong I highly recommend Bart Wronski's blog or the classic A Pixel Is Not A Little Square. I'm also committing a bit of a sin here by drawing lines connecting the data-samples in my graphs... but all of that is a subject for another day.)

Anyway, if we take this motion from the dataset:

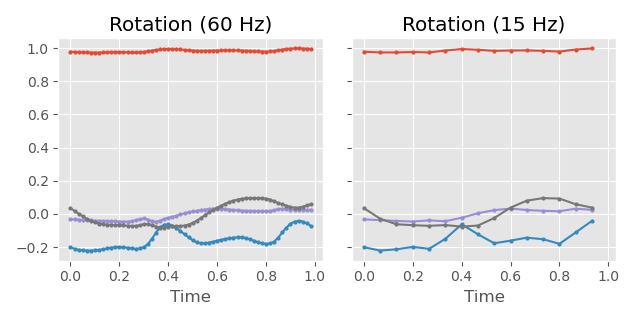

And plot a one second window of the shoulder rotation over time...

...we can see that even at 60 Hz the signal is not entirely smooth. Once we are down to 15 Hz we have a signal that is just obviously far too coarse to capture the nuances of the motion.

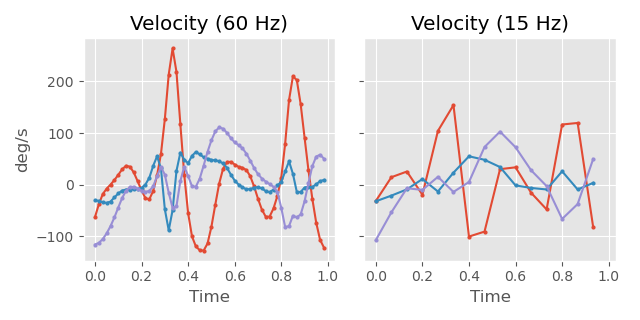

If we plot the velocity of these signals (computed by finite difference) you can see we can get differences of over 90 degrees per second in the velocity we compute between the 60Hz signal and the 15Hz one.

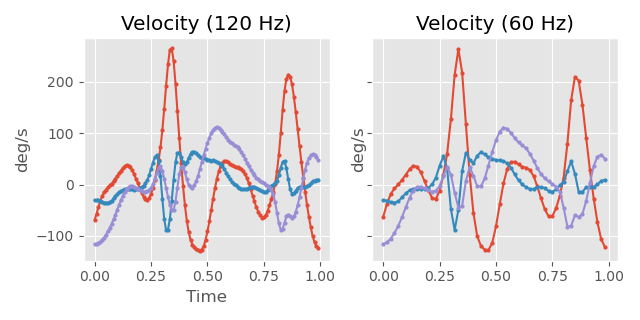

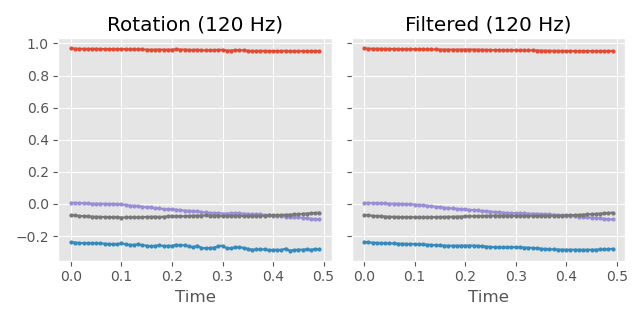
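If you want to play with this yourself, here is a minimal sketch of the comparison in NumPy. The signal is a synthetic stand-in I made up for illustration (not the actual shoulder rotation from the dataset), and `finite_difference_velocity` is just a name chosen for this example.

```python
import numpy as np

def finite_difference_velocity(angles_deg, sample_rate_hz):
    """Forward-difference velocity in degrees per second."""
    return np.diff(angles_deg) * sample_rate_hz

# Synthetic stand-in for one rotation channel (NOT real mocap data):
# a slow 1 Hz swing plus a smaller, faster 6 Hz component.
t = np.arange(120) / 120.0  # one second at 120 Hz
angle_120 = 30.0 * np.sin(2 * np.pi * 1.0 * t) + 3.0 * np.sin(2 * np.pi * 6.0 * t)

# Naive decimation: keep every n-th sample, with no anti-aliasing filter.
angle_60 = angle_120[::2]
angle_15 = angle_120[::8]

vel_120 = finite_difference_velocity(angle_120, 120.0)
vel_60 = finite_difference_velocity(angle_60, 60.0)
vel_15 = finite_difference_velocity(angle_15, 15.0)

for name, vel in [("120 Hz", vel_120), ("60 Hz", vel_60), ("15 Hz", vel_15)]:
    print(f"{name}: peak |velocity| = {np.abs(vel).max():.1f} deg/s")
```

The decimation here is deliberately naive, since that is what most animation pipelines do in practice.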

Also take a second to note how quickly the angular velocities can change in this kind of data. Here we go from a velocity of around +150 degrees per second in one direction to -120 degrees per second in the opposite direction, within the space of 0.2 seconds. In fact, I would argue that even at 120 Hz, when we have these large peaks, the velocities are not really smooth at this sampling frequency:

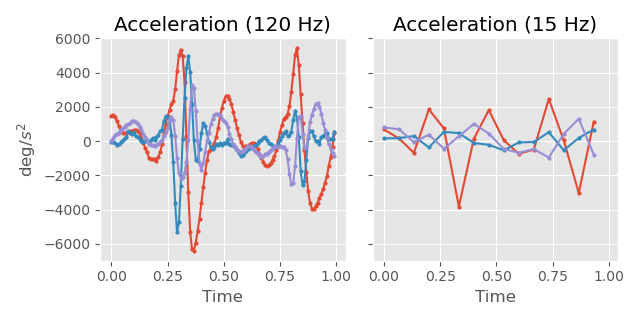

The differences in acceleration are therefore obviously even worse, and completely degraded for the 15 Hz signal:

Here is an overlay of a clip of animation sampled at 60 Hz, and the same clip downsampled to 15 Hz (by taking every 4th frame) and then linearly upsampled again.

At normal playback speed, most of the time the visual difference is fairly minimal. However if we slow the playback down we can see issues: the temporal down-sampling has introduced errors of at least several centimeters in some places.
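The round trip described above (keep every 4th frame, then linearly interpolate back) is easy to reproduce. This is a rough sketch on a made-up hand trajectory rather than the real clip, so the numbers it prints are only indicative; `decimate_and_relinearize` is a hypothetical helper name.

```python
import numpy as np

def decimate_and_relinearize(positions, factor):
    """Keep every `factor`-th frame, then linearly interpolate back.

    positions: array of shape (frames, 3), in meters.
    """
    frames = np.arange(positions.shape[0])
    kept = frames[::factor]
    out = np.empty_like(positions)
    for axis in range(positions.shape[1]):
        out[:, axis] = np.interp(frames, kept, positions[kept, axis])
    return out

# Synthetic hand trajectory in meters (an assumption, NOT dataset values).
# 61 frames at 60 Hz, so the final frame is one of the kept frames.
t = np.arange(61) / 60.0
hand = np.stack([
    0.3 * np.sin(2 * np.pi * 2.0 * t),
    1.2 + 0.1 * np.cos(2 * np.pi * 3.0 * t),
    0.2 * t,
], axis=1)

approx = decimate_and_relinearize(hand, 4)  # 60 Hz -> 15 Hz -> 60 Hz
error_cm = 100.0 * np.linalg.norm(hand - approx, axis=1)
print(f"max error: {error_cm.max():.2f} cm, mean error: {error_cm.mean():.2f} cm")
```

Note that this interpolates positions. For joint rotations you would want slerp or a normalized lerp on the quaternions instead.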

The fact is this: we often deal with animation data sampled at 60 Hz not because no higher frequency information exists, or because 60 Hz is enough to losslessly record all the information in the data, but because 60 Hz is the rate most monitors refresh at. As I described in my article on cubic interpolation of quaternions - as soon as we start to sample the data at other rates, unless we are careful, sampling frequency artefacts can re-appear very quickly.

And I think we can conclude that although we can sometimes get away with displaying animation at sampling frequencies lower than 60 Hz without much perceptual difference, if we really want to hit the motion quality shown above then 60 Hz is already kind of the minimum we should be aiming for (for storage and processing too) if we want to preserve the temporal information in the data. And if you are planning on doing any kind of real signal processing on your data (such as frequency transformations, low-pass filters, or even extraction of velocities, accelerations, or torques) you should try to aim for a higher sampling rate if you can.

If you want a ballpark figure, this paper provides a more detailed analysis and suggests that to preserve 90% accuracy for the acceleration information at least 180 Hz is required when it comes to human motion.

Numerical Precision

So that is temporal resolution. What about in terms of the numerical precision of each frame?

Here is a quick experiment that shows how important this is too. This is what our animation data looks like if I round all the quaternion floating point values to three decimal places and then re-normalize before performing the forward kinematics step:

As you can see, with three decimal places of accuracy, the quantization error is pretty obvious (take a look at the head) and we see the character jumping discretely from pose to pose. So numerically we also need a pretty high amount of precision in our floating point values to represent each pose.

Here is another similar experiment. In this case I masked out the bottom 16 bits of the floating point mantissa.

The error is again pretty obvious. So to get perceptually accurate motion we probably need something like 10 of the 23 bits of the floating point mantissa to be correct.
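Both quantization experiments can be sketched in a few lines. This is my own minimal reconstruction under some assumptions: random unit quaternions stand in for real pose data, and the helper names are invented for the example. It only measures the per-joint rotation error, not the error after forward kinematics.

```python
import numpy as np

def normalize(q):
    return q / np.linalg.norm(q, axis=-1, keepdims=True)

def round_quaternions(q, decimals=3):
    """Round every component to `decimals` places, then re-normalize."""
    return normalize(np.round(q, decimals))

def mask_mantissa_bits(q, num_bits=16):
    """Zero the lowest `num_bits` of each float32 mantissa, then re-normalize."""
    bits = np.ascontiguousarray(q, dtype=np.float32).view(np.uint32)
    mask = np.uint32((0xFFFFFFFF << num_bits) & 0xFFFFFFFF)
    truncated = (bits & mask).view(np.float32).astype(np.float64)
    return normalize(truncated)

def angle_between_deg(a, b):
    """Rotation angle (degrees) between unit quaternions a and b."""
    dot = np.abs(np.sum(a * b, axis=-1))
    return np.degrees(2.0 * np.arccos(np.clip(dot, 0.0, 1.0)))

# Random unit quaternions as a stand-in for local joint rotations.
rng = np.random.default_rng(0)
quats = normalize(rng.normal(size=(1000, 4)))

rounded = round_quaternions(quats, decimals=3)
masked = mask_mantissa_bits(quats, num_bits=16)

err_round = angle_between_deg(quats, rounded)
err_mask = angle_between_deg(quats, masked)
print(f"rounding to 3 decimals: max {err_round.max():.3f} deg per joint")
print(f"masking 16 mantissa bits: max {err_mask.max():.3f} deg per joint")
```

A fraction of a degree per joint does not sound like much, but it changes discretely from frame to frame and accumulates down the joint chain.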

Obviously a huge part of this is the error propagation that we get down the joint chain... but either way, it means if we have any kind of numerical system that processes, generates, or in any way touches the local joint rotations in animation data we should make sure it is numerically accurate to as many decimal places as possible. For example, 16-bit floats are probably a no-go for animation generation if we want any chance of achieving the quality of animation shown at the beginning of this article - they are only accurate to about three decimal places in the zero to one range (they have a 10-bit mantissa) which leaves extremely little room for error.
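You can check the 16-bit float numbers directly with NumPy. A quick sketch: the largest component of a unit quaternion always has a magnitude between 0.5 and 1, so the spacing of representable values in that range is the one that matters most. The example quaternion is arbitrary.

```python
import numpy as np

info = np.finfo(np.float16)
print("explicit mantissa bits:", info.nmant)

# Gap between adjacent representable values in [0.5, 1).
gap_half = float(np.spacing(np.float16(0.5)))
gap_single = float(np.spacing(np.float32(0.5)))
print("float16 spacing in [0.5, 1):", gap_half)
print("float32 spacing in [0.5, 1):", gap_single)

# Round-trip one (arbitrary) quaternion through float16.
q = np.array([0.70710678, 0.0, 0.70710678, 0.0])
q_half = q.astype(np.float16).astype(np.float64)
max_component_error = np.abs(q - q_half).max()
print("max component error after float16 round trip:", max_component_error)
```

The float16 gap in that range is 2^-11, which is roughly 0.0005, so the fourth decimal place is already unreliable.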

Take a moment to consider how different this is to the image-generation domain where images are usually stored with just 8-bits of precision per channel. Not only does this mean they require much less precision (8-bits means each pixel channel can only take one of 256 values), but since humans are so much less sensitive to pixel color differences we don't even really need all of it - noise, quantization error, and other artefacts are often not even noticeable to the human eye anyway. As a ballpark figure I would say animation generation needs to be at least one hundred times more numerically accurate than image generation if we are dealing with local joint rotations.

We can do a simple experiment to show this. Here I add Gaussian noise to the quaternions of the local joint rotations with a standard deviation of 0.001 and then re-normalize. As you can see the motion is totally broken:

On the other hand, starting with this image...

Here is what I get if I add noise with a standard deviation of 0.01. The difference is barely noticeable...
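The animation half of this comparison can be sketched as follows. This is a toy setup I am assuming for illustration: a single straight chain of six joints with 25 cm bones and identity rotations, rather than a real skeleton. It adds the same 0.001 noise to the local quaternions, re-normalizes, runs forward kinematics, and measures how far the end of the chain moves. Quaternions are stored as (w, x, y, z).

```python
import numpy as np

def quat_mul(a, b):
    """Hamilton product of quaternions stored as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return np.array([
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    ])

def quat_rotate(q, v):
    """Rotate 3D vector v by unit quaternion q."""
    w, u = q[0], q[1:]
    t = 2.0 * np.cross(u, v)
    return v + w * t + np.cross(u, t)

def chain_end_position(local_rots, offsets):
    """Forward kinematics along one chain; offsets are in the parent frame."""
    rot = np.array([1.0, 0.0, 0.0, 0.0])
    pos = np.zeros(3)
    for q, offset in zip(local_rots, offsets):
        pos = pos + quat_rotate(rot, offset)
        rot = quat_mul(rot, q)
    return pos

rng = np.random.default_rng(0)
num_joints = 6  # toy chain, an assumption for this sketch
offsets = np.tile([0.0, 0.25, 0.0], (num_joints, 1))
clean = np.tile([1.0, 0.0, 0.0, 0.0], (num_joints, 1))

noisy = clean + rng.normal(scale=0.001, size=clean.shape)
noisy /= np.linalg.norm(noisy, axis=-1, keepdims=True)

end_clean = chain_end_position(clean, offsets)
end_noisy = chain_end_position(noisy, offsets)
error_mm = 1000.0 * np.linalg.norm(end_noisy - end_clean)
print(f"end of chain moved by {error_mm:.2f} mm")
```

Keep in mind that in the experiment above the noise is different on every frame, so whatever offset you get here shows up as jitter rather than as a constant shift.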

So to summarize: if we want to produce animation with the quality shown at the beginning of this article, 60 Hz is really the minimum frequency we should be dealing with when it comes to the temporal resolution, and we need numerical accuracy of at least five or six decimal places on our local joint rotations. Anything less than that and we can already expect to be producing visible errors.

Data Issues

Now that we have an idea of what high quality motion capture data looks like and what the constraints are, let's take a look at some of the other data sets in the community.

First a brief disclaimer: my goal is not to be critical of these datasets. They have been incredible workhorses for the vision and graphics communities and I really appreciate all the work that has gone into them from so many people. I've used them extensively in my own papers! My goal here is simply to talk about some of the issues you might face when trying to use them as a researcher.

For example, let's look at some data from the MOYO yoga part of the AMASS dataset. Here I have extracted the skeleton via the regression method. I'm going to put it on a skinned character too, but first let's look at just the skeletal data itself:

We'll start with the obvious problems and the first one is right there - the rest pose:

I'm unconvinced that the actor had their knees bent outward like that when they were doing a T-pose. That means we already have some kind of constant systematic error in our data when it comes to joint rotations and positioning. Not only is that going to make it difficult to skin and retarget this data but having such a large systematic error right from the start doesn't give us much confidence regarding what else might have gone wrong with the capture and processing.

The next most obvious issue is that the character's feet penetrate the floor. This fix is simpler - we just need to vertically translate the character in all the data. No big deal - but it does mean we can't just naively throw this data into a network along with a bunch of other data without manual correction and cleaning.

Third is that the data is missing hand, finger, and toe movements - although that is pretty common for most publicly available datasets.
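For the floor penetration fix mentioned above, a minimal sketch might look like this. I am assuming global joint positions in a `(frames, joints, 3)` array with Y up, and `lift_onto_floor` is a hypothetical helper, not something from AMASS. It uses joint centers rather than the soles of the feet, so in practice you would want to add the foot thickness too.

```python
import numpy as np

def lift_onto_floor(global_positions, up_axis=1):
    """Translate a whole clip vertically so its lowest joint sits at height 0.

    global_positions: array of shape (frames, joints, 3), in meters.
    Returns the shifted copy and the offset that was applied.
    """
    offset = -global_positions[..., up_axis].min()
    shifted = global_positions.copy()
    shifted[..., up_axis] += offset
    return shifted, offset

# Synthetic clip whose lowest joints sink about 5 cm below the floor.
rng = np.random.default_rng(0)
positions = rng.uniform(0.0, 1.8, size=(100, 22, 3))
positions[..., 1] -= 0.05

shifted, offset = lift_onto_floor(positions)
print(f"applied vertical offset: {100.0 * offset:.1f} cm")
```

Taking the single lowest value is fragile if the clip contains glitches, so a low percentile of the per-frame minimum is probably a safer choice on real data.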

If we browse a few of the clips in this dataset we can see there are many places where we have glitches and jitters:

All of these sections should be trimmed from the database before training. These kinds of glitches and outliers can be really damaging to the training of a neural network and often cause exploding gradients and NaNs to appear in losses. Techniques like gradient clipping often hide/handle these kinds of data issues but even with that, there can be big negative effects on things such as normalization.
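One crude way to find candidate sections to trim is to flag frames where any joint moves implausibly fast. This is only a heuristic sketch: the 15 m/s threshold is a number I picked for illustration, not a validated limit, and it will not catch the more subtle low-level noise.

```python
import numpy as np

def find_glitch_frames(global_positions, sample_rate_hz, max_speed=15.0):
    """Return indices of frames where any joint exceeds `max_speed` (m/s).

    global_positions: array of shape (frames, joints, 3), in meters.
    """
    velocity = np.diff(global_positions, axis=0) * sample_rate_hz
    speed = np.linalg.norm(velocity, axis=-1)  # (frames - 1, joints)
    bad = np.any(speed > max_speed, axis=1)
    return np.nonzero(bad)[0] + 1  # index of the frame being jumped to

# Synthetic clip: a still character where one joint teleports 1 m for a frame.
clip = np.zeros((100, 22, 3))
clip[50, 3, 0] += 1.0

glitches = find_glitch_frames(clip, sample_rate_hz=60.0)
print("suspicious frames:", glitches.tolist())
```

A single-frame pop is flagged twice, once for the jump away and once for the jump back, so in practice you would trim a window around the flagged frames.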

Lots of the clips also have more subtle issues like this kind of low level noise that is very hard to spot (take a look at the spine about 5 seconds in):

We can remove this noise by filtering the data, but as discussed earlier, at a sampling rate of 60 Hz we cannot perfectly capture the fastest component of human motion. This means that there is important motion information encoded in the higher frequencies of our signal. Applying any low-pass filter for denoising would also inevitably erase parts of the actual signal.
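To make that trade-off concrete, here is a small sketch of a Gaussian low-pass filter written with plain NumPy. The filter width (a standard deviation of two frames) and the two test signals are assumptions for the example. The slow component stands in for gross body motion and the fast one for small, quick details.

```python
import numpy as np

def gaussian_kernel(sigma_frames):
    radius = int(3 * sigma_frames)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma_frames) ** 2)
    return kernel / kernel.sum()

def gaussian_smooth(signal, sigma_frames):
    """Smooth a 1D signal, padding the ends by repeating the edge values."""
    kernel = gaussian_kernel(sigma_frames)
    radius = len(kernel) // 2
    padded = np.pad(signal, radius, mode="edge")
    return np.convolve(padded, kernel, mode="valid")

rate = 60.0
t = np.arange(240) / rate
slow = 10.0 * np.sin(2 * np.pi * 0.5 * t)  # 0.5 Hz, large amplitude
fast = 1.0 * np.sin(2 * np.pi * 10.0 * t)  # 10 Hz, small amplitude

smoothed_slow = gaussian_smooth(slow, 2.0)
smoothed_fast = gaussian_smooth(fast, 2.0)

print("slow component peak after filtering:", np.abs(smoothed_slow[20:-20]).max())
print("fast component peak after filtering:", np.abs(smoothed_fast[20:-20]).max())
```

The filter cannot tell whether the 10 Hz component is noise or a real detail of the motion. It attenuates both equally.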

For example, if I apply this same Gaussian filter to the Motorica data we can see it can end up modifying the data by several centimeters in places and removes some subtle details. Here look at how the hand motion gets smoothed out:

I've done my best to retarget a medium-sized AMASS skeleton to the UE5 Manny so we can see some of the issues on the same skinned character. This is obviously not a perfect comparison, but nonetheless on a skinned character a whole new domain of artefacts becomes visible. Obviously we have missing finger and toe motion, but we also can see some joints don't twist properly, sometimes the spine pops in a weird way, we get odd shoulder motion, or the feet slide a lot.

Large parts of the CMU motion capture database (which AMASS contains) and many of the other large databases we use in graphics contain similar issues. Here are a few random clips from the CMU portion of AMASS where we can see all sorts of artefacts. Again, this is not an exactly fair comparison since I struggled to build a retargeting setup which worked well given the variety of rest poses in the data - and of course I have cherry picked some bad examples - but it still gives you an idea of the data.

As I mentioned before - I really appreciate the work that has gone into these kinds of datasets which have been powering the academic community for a long time and led to a huge amount of progress. But these datasets also come with some downsides:

First, data of this quality is simply too far off being practically usable in the video games or VFX industry. This immediately makes it very hard to evaluate fairly the practicality of the proposed method since we simply don't know how it might behave when trained on higher quality data.

Second, when examining the animation generated by a system trained on this kind of data it becomes impossible to distinguish between animation artifacts caused by the method itself, and those which are present in the training data and the system is just faithfully reproducing.

Third, when systems trained on this kind of data report error metrics such as joint position improvements of a few centimeters or small improvements to the FID score, given we know the ground truth data has obvious systematic errors on the order of tens of centimeters as well as other glitches and noise, it calls into question whether these kinds of metric differences are even meaningful any longer.

Mixed Data

An argument I've heard often is this: Does it really matter if the quality is variable when there is so much more data in databases like AMASS than there are in other datasets? Can't I train a model on a large dataset of low quality motion and then fine-tune it on the high quality data? The mantra we hear a lot in deep learning for this kind of training regime is "Noise cancels out, signal adds up" - which is absolutely true.

I think this is a very interesting argument, and it's an approach which has worked fantastically in other domains (Speech Synthesis being one example). There are a lot of very smart people in the field betting on this kind of idea and I will be interested to see what kind of results it produces.

While I still don't really know if this will work, my gut feels skeptical. In my experience most of the time when you combine two animation datasets, you end up with a multi-modal dataset made up of two clusters that don't necessarily interact properly with each other.

In fact, we can somewhat see this already, because AMASS is a collection of many different datasets and capture sessions. If we look at the rest pose from part of the CMU portion of the AMASS dataset:

And then look at the rest pose from the Yoga portion:

We can see already just by eyeballing that depending on which session the data comes from the whole rest pose is completely different. If we train a model on this data it is going to need to learn how to switch between different styles of rest pose depending on what kind of motion we are asking it to produce as output. These datasets might be on the same skeleton structure but that does not mean they are "combined".

To me, taking a system trained on low quality dancing data (for example), training it on high quality locomotion data, and expecting it to produce high quality dancing data (and not low quality dancing data) seems like wishful thinking. To put it bluntly, the common mantra which much more reflects my experience with deep learning is this one: "garbage in, garbage out".

As mentioned at the beginning of the article, I like to think of the training data as your ceiling. It represents the best possible quality you can expect to produce from your model, which in most cases you will only be able to approach asymptotically. That is why targeting the highest quality source data and preserving that quality through processing steps is so important.

In fact, I often find that as soon as you put animation data into a neural network in any form you can expect some loss in quality. We can see this by training a very simple auto-encoder on the high quality data from the Motorica dataset we showed before:

(In red is the ground truth and in green is the reproduction.)

Even after some careful training which accounts for errors propagating down the joint chain, we still get some error in the autoencoder's reconstructions on the training set which manifest in small amounts of foot sliding and other posing errors.

In this case I used 8 layers of 512 neurons for both the encoder and decoder and a 256-dimensional latent space. Perhaps my network was just not large enough? More layers generally equals better right? Well in this case the opposite is true. In fact, we can get almost perfect reproductions if we just use 2 layers instead of 8:

So not only can quality degrade as soon as we enter any kind of neural network, but it seems that the more layers that data passes through the worse things are.

All of these sources of error accumulate and propagate and obfuscate our ability to evaluate methods and architectural differences objectively and fairly. Methods that produce foot sliding on top of data (or other methods) that already have foot sliding, hide the foot sliding they introduce, and make the sources of error infinitely more difficult to decouple and attribute to specific methodological decisions.

Also, the models we train end up wasting their capacity learning to emulate the errors, noise, multiple-modes, artefacts, and whatever other undesirable things might be in the data. For example, here is the same 2 layer auto-encoder trained on some of our AMASS yoga data:

Now all of the care we took to retain precision is working against us because we're attempting to reproduce the errors and noise. If we're not careful you can see how easy it would be to come to the wrong conclusion about model architectures. Even with this basic example we're in a situation where with good data quality we might conclude "more layers = worse", while with poor data quality "more layers = better" (as, for example, it might remove the noise more).

It might seem like I am nit-picking on tiny issues, and that errors of a couple of centimeters here and there are really just not a big deal compared to the new opportunities that Machine Learning opens up... but a lesson I think we can learn from the rendering community is that hacks, approximations, and other sources of errors might not seem like a big deal - until suddenly they are - because at some point you end up with a final result that looks like a hot mess - and you have no idea what to do - because you have hundreds of different sources of errors, none of which you really understand or can control - compounding together in a deeply complex way. That is the situation the rendering folks have been untying over the last 15 years.

Before Deep Learning

When talking about animation quality it's a good exercise to go back and look at SIGGRAPH papers from 10 years ago before deep learning started to take off. Here is a selection of my favorites:

Motion Fields for Interactive Character Animation (2010)

Continuous Character Control with Low-Dimensional Embeddings (2012)

Data-driven Finger Motion Synthesis for Gesturing Characters (2012)

Realtime Style Transfer for Unlabeled Heterogeneous Human Motion (2015)

Although all these methods have issues (and there were lots of papers from this time which did not look nearly as good), in many ways, in terms of pure animation quality what they produced is better than some of the results from deep learning methods we see now. At the very least, the animation they produce is almost always clean and artefact free: it does not have noise, or jumps and jitters, and in general it does not even contain much foot sliding.

If our newer methods cannot attain this quality bar then the elephant in the room is that we may not actually be making progress in the field - we might just be trading off one thing for another.

So let's talk more about animation quality - let's work out how to tackle it and not try to push it under the rug because it is an inconvenient topic when it comes to publishing animation papers that use deep learning.

Improving Animation Quality

So what can we do to try and improve animation quality across the board?

I think those of us in the industry have some responsibility here. Almost all games and VFX companies don't share their data with the academic world (with the exception of Ubisoft who have released some very high quality animation datasets), so how can we penalize academics for not reaching a bar they don't even know exists? We need to make more of an effort to share what we can, not just our data, but also our processes and considerations when we capture it.

And because I believe in putting your money where your mouth is I've done a few things in preparation for this blog post that I hope will help:

1) I've skinned a simple character (to the best of my limited abilities) to the skeleton used in Ubisoft's ZeroEGGS dataset to provide a clean and clear way to evaluate overall motion quality including fingers, toes, hand and feet contacts.

2) I've spent some time re-solving in MotionBuilder Ubisoft's LaFAN dataset from the original marker data and preparing a new version which is better quality and closer to what we used internally: it has toe and finger motion (at least one marker's worth of finger motion), is more carefully retargeted to this new skeleton, and is solved at 60fps instead of 30fps. Given I'm not a MotionBuilder expert, and the limited finger markers in the original data, the result is still far from perfect - but I hope it can provide a reasonable baseline for researchers given the size and diversity of the dataset. You can find it here.

3) I've retargeted the Motorica Dance Dataset to this skeleton in MotionBuilder so that it can be combined with the previous two datasets. You can find it here.

4) I've also done a basic adjustment of the ZeroEGGS dataset so that it looks correct on this new simple character. You can find it here.

5) I've prepared a really basic example raylib application that can be used to view animation data from these datasets on a skinned character. You can find it here and a pure python version here.

That gives us at least three large datasets all on a common character skeleton with fingers, toes, and a skinned mesh, covering Dance, Speech, and everything in LaFAN. I hope that can be considered a pretty good starting point for anyone who is interested in improving the motion quality in their animation research.

Finally, if anyone else releases similar quality datasets I promise I will also make a good attempt to retarget them to the same setup.

With that said, I think there are things to be done in the academic world too. We need to strike a balance between rewarding risk-taking, innovation, and unique ideas - but we also need to make sure we don't lower the bar to the point where our results become irrelevant to the industry or we lose the ability to evaluate what works and what doesn't. Most importantly: let us not avoid the discussion of animation quality just because it may be inconvenient for publishing.

Friendly Reminder: All of these datasets are NOT licensed for commercial use. They are for research use only. The quickest way to ruin any good-will from the industry in terms of releasing data is to use this data (or models trained on this data) in a commercial product without acquiring a commercial license from the original owners of the datasets.

Conclusion

I'd like to end on something more philosophical.

There has been a huge amount of change in the tech world over the last 10 years and almost all companies (including the one I work for) are shifting their focus away from being content creators, to being storefront owners and algorithmic content curators. The new business model is this: instead of employing the best artists and creators you can find to make content, you instead pay your users to create the content (be that apps, games, videos, recipes, whatever), and that content is then evaluated and filtered algorithmically for other users - not based on some subjective evaluation of quality - but based on how much engagement it generates.

The automated, engagement based nature of this process has created a race to the bottom. YouTube users are creating hundreds of brain-hacking Spiderman-Elsa videos to farm views from undeveloped minds. Game developers are auto-generating thousands of gambling games for the Apple app store. Everything is being flooded with low-quality, engagement-hacking crap. The people who are actually trying to build quality content are being forced to sink or swim - optimize for engagement or else be forgotten.

There are many people involved in deep learning who are trying very hard to sell you the idea that in this new world of big-data, recommendation algorithms, large language models, and deep learning, quantity is the only thing that matters: more data, more GPUs, more papers, more students, more layers, more neurons, more users, more hours of engagement. Take a second to consider why it might be in the interests of the people shouting this the loudest, to convince everyone it is really true.

But ask almost anyone what they actually want in this world and they will say the opposite. They want less content, of higher quality.

And if that is what all of us want, then that is what we need to try and do: focus on producing quality content ourselves, and reward others when they do so too.